SKYSPIN

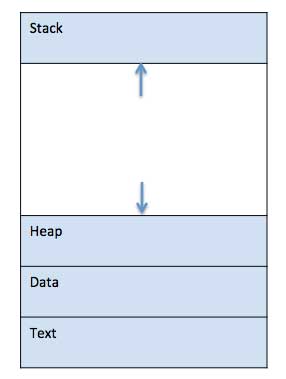

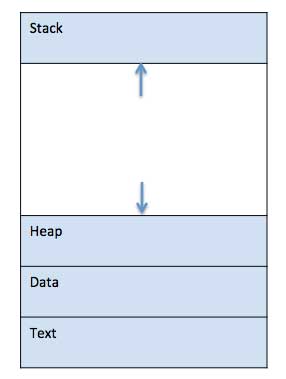


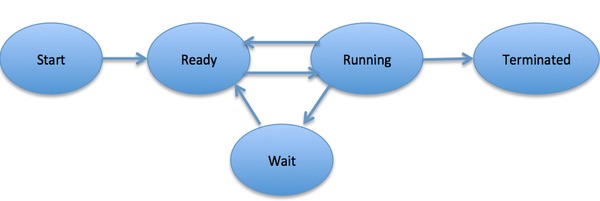


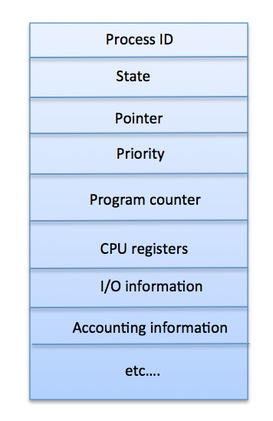

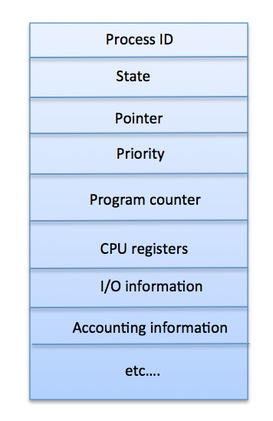



UNIT-2 (PROCESS MANAGEMENT)

Process

A process is basically a program in execution. The execution of a process must progress in a sequential fashion. A process is defined as an entity which represents the basic unit of work to be implemented in the system. To put it in simple terms, we write our computer programs in a text file, and when we execute this program, it becomes a process which performs all the tasks mentioned in the program.

When a program is loaded into memory and becomes a process, it can be divided into four sections: stack, heap, text and data. The following image shows a simplified layout of a process inside main memory −

Program

A program is a piece of code which may be a single line or millions of lines. A computer program is usually written by a computer programmer in a programming language. For example, here is a simple program written in the C programming language −

```c
#include <stdio.h>

int main(void) {
    printf("Hello, World! \n");
    return 0;
}
```

A computer program is a collection of instructions that performs a specific task when executed by a computer. When we compare a program with a process, we can conclude that a process is a dynamic instance of a computer program.

A part of a computer program that performs a well-defined task is known as an algorithm. A collection of computer programs, libraries and related data is referred to as software.

Process Life Cycle

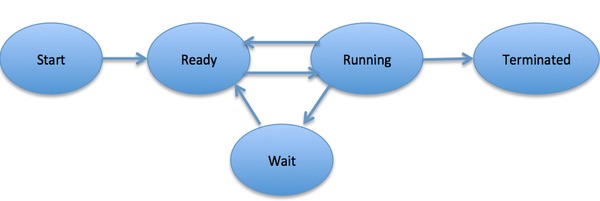

When a process executes, it passes through different states. These states may differ between operating systems, and their names are not standardized either. In general, a process can be in one of the following five states at a time.
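Assuming the common textbook five-state model (new/start, ready, running, waiting, terminated), the legal transitions between states can be sketched in C as a small lookup. The enum constants and `valid_transition` are hypothetical names chosen for illustration, since, as noted above, real systems name their states differently.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical state names for the five-state model. */
typedef enum { NEW, READY, RUNNING, WAITING, TERMINATED } ProcState;

/* Returns true if the five-state model allows a process to go from `from` to `to`. */
bool valid_transition(ProcState from, ProcState to)
{
    switch (from) {
    case NEW:        return to == READY;        /* admitted into the ready queue */
    case READY:      return to == RUNNING;      /* dispatched to a CPU */
    case RUNNING:    return to == READY         /* preempted, e.g. time slice used up */
                         || to == WAITING       /* waiting for I/O or another event */
                         || to == TERMINATED;   /* finished or killed */
    case WAITING:    return to == READY;        /* the awaited event occurred */
    case TERMINATED: return false;              /* a terminated process never returns */
    }
    return false;                               /* unreachable for valid enum values */
}
```

Note that a waiting process cannot go straight back to running: once its event occurs it re-enters the ready state and must be dispatched again.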

Process Control Block (PCB)

A Process Control Block is a data structure maintained by the Operating System for every process. The PCB is identified by an integer process ID (PID). A PCB keeps all the information needed to keep track of a process, as listed below in the table −

The architecture of a PCB is completely dependent on the Operating System and may contain different information in different operating systems. Here is a simplified diagram of a PCB −

The PCB is maintained for a process throughout its lifetime, and is deleted once the process terminates.
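The exact contents of a PCB are OS-specific (Linux, for instance, keeps this per-process information in its `struct task_struct`). As an illustration only, a PCB holding the kinds of information PCBs commonly carry (state, ID, program counter, registers, scheduling, memory-management, accounting and I/O information) might look like this in C. Every field name, size and the `pcb_init` helper are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define NREGS  16   /* hypothetical number of saved registers */
#define NFILES 16   /* hypothetical open-file limit */

typedef enum { NEW, READY, RUNNING, WAITING, TERMINATED } ProcState;

/* Illustrative PCB; all field names are hypothetical. */
typedef struct Pcb {
    int         pid;                /* process ID */
    ProcState   state;              /* current process state */
    uint64_t    program_counter;    /* address of the next instruction to run */
    uint64_t    registers[NREGS];   /* saved CPU registers */
    int         priority;           /* CPU-scheduling information */
    uint64_t    cpu_time_used;      /* accounting information */
    void       *page_table;         /* memory-management information */
    int         open_files[NFILES]; /* I/O status: open descriptors, -1 = unused */
    struct Pcb *next;               /* link to the next PCB in a scheduling queue */
} Pcb;

/* Set up the PCB of a newly created process. */
void pcb_init(Pcb *pcb, int pid, int priority)
{
    memset(pcb, 0, sizeof *pcb);    /* zero counters and saved registers */
    pcb->pid = pid;
    pcb->state = NEW;
    pcb->priority = priority;
    pcb->page_table = NULL;         /* no address space assigned yet */
    pcb->next = NULL;               /* not on any queue yet */
    for (int i = 0; i < NFILES; i++)
        pcb->open_files[i] = -1;
}
```

The `next` pointer is what lets the OS link PCBs into the scheduling queues without copying them.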

Operating System - Process Scheduling

Definition

Process scheduling is the activity of the process manager that removes the running process from the CPU and selects another process on the basis of a particular strategy.

Process scheduling is an essential part of multiprogramming operating systems. Such operating systems allow more than one process to be loaded into executable memory at a time, and the loaded processes share the CPU using time multiplexing.

Process Scheduling Queues

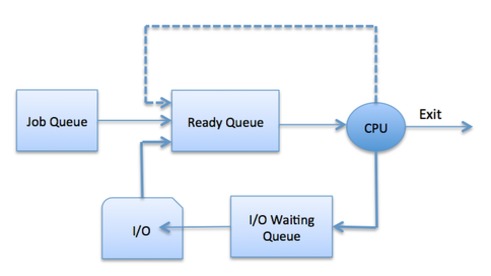

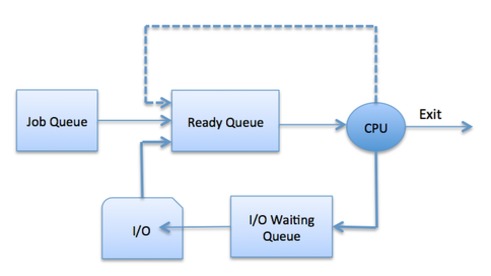

The OS maintains all PCBs in Process Scheduling Queues. The OS maintains a separate queue for each of the process states, and PCBs of all processes in the same execution state are placed in the same queue. When the state of a process is changed, its PCB is unlinked from its current queue and moved to its new state queue.

The Operating System maintains the following important process scheduling queues −

- Job queue − This queue keeps all the processes in the system.

- Ready queue − This queue keeps a set of all processes residing in main memory, ready and waiting to execute. A new process is always put in this queue.

- Device queues − The processes which are blocked due to unavailability of an I/O device constitute this queue.

The OS can use different policies to manage each queue (FIFO, Round Robin, Priority, etc.). The OS scheduler determines how to move processes between the ready queue and the run queue, which can have only one entry per processor core on the system; in the above diagram, the run queue has been merged with the CPU.
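The unlink-and-relink step described for scheduling queues can be sketched with FIFO queues threaded through a `next` pointer in each PCB, assuming FIFO as the queue policy. The minimal `Pcb` type, `PcbQueue`, and the helper functions are hypothetical names; a real kernel's PCB carries far more fields.

```c
#include <assert.h>
#include <stddef.h>

/* Minimal hypothetical PCB: just an ID and the queue link. */
typedef struct Pcb {
    int pid;
    struct Pcb *next;
} Pcb;

/* FIFO queue of PCBs linked through their `next` field. */
typedef struct {
    Pcb *head;
    Pcb *tail;
} PcbQueue;

/* Append a PCB at the tail of a queue. */
void enqueue(PcbQueue *q, Pcb *p)
{
    p->next = NULL;
    if (q->tail)
        q->tail->next = p;
    else
        q->head = p;
    q->tail = p;
}

/* Remove and return the PCB at the head (e.g. the next process to dispatch). */
Pcb *dequeue(PcbQueue *q)
{
    Pcb *p = q->head;
    if (p) {
        q->head = p->next;
        if (!q->head)
            q->tail = NULL;
        p->next = NULL;
    }
    return p;
}

/* Unlink a specific PCB wherever it sits in the queue; returns 1 if found. */
int queue_remove(PcbQueue *q, Pcb *p)
{
    Pcb *prev = NULL;
    for (Pcb *cur = q->head; cur; prev = cur, cur = cur->next) {
        if (cur != p)
            continue;
        if (prev)
            prev->next = cur->next;
        else
            q->head = cur->next;
        if (q->tail == cur)
            q->tail = prev;
        cur->next = NULL;
        return 1;
    }
    return 0;
}

/* A state change: unlink the PCB from its current queue, link it into the new one. */
int move_pcb(PcbQueue *from, PcbQueue *to, Pcb *p)
{
    if (!queue_remove(from, p))
        return 0;
    enqueue(to, p);
    return 1;
}
```

For example, if the ready queue holds PCBs 1, 2, 3 and process 2 blocks on a device, `move_pcb(&ready, &device, &b)` leaves the ready queue as 1, 3 and puts 2 on the device queue.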

Two-State Process Model

Two-state process model refers to running and non-running states which are described below −

Schedulers

Schedulers are special system software which handle process scheduling in various ways. Their main task is to select the jobs to be submitted into the system and to decide which process to run. Schedulers are of three types −

Long Term Scheduler

It is also called a job scheduler. A long-term scheduler determines which programs are admitted to the system for processing. It selects processes from the job queue and loads them into memory for execution, where they become available for CPU scheduling.

The primary objective of the job scheduler is to provide a balanced mix of jobs, such as I/O-bound and processor-bound jobs. It also controls the degree of multiprogramming: if the degree of multiprogramming is stable, then the average rate of process creation must equal the average rate at which processes leave the system.

On some systems, the long-term scheduler may be absent or minimal; time-sharing operating systems typically have no long-term scheduler. The long-term scheduler is used when a process changes state from new to ready.

Short Term Scheduler

It is also called the CPU scheduler. Its main objective is to increase system performance in accordance with the chosen set of criteria. It carries out the change of a process from the ready state to the running state: the CPU scheduler selects one process among those that are ready to execute and allocates the CPU to it.

Short-term schedulers, also known as dispatchers, make the decision of which process to execute next. Short-term schedulers are faster than long-term schedulers.

Medium Term Scheduler

Medium-term scheduling is a part of swapping. It removes processes from memory, which reduces the degree of multiprogramming. The medium-term scheduler is in charge of handling swapped-out processes.

A running process may become suspended if it makes an I/O request. A suspended process cannot make any progress towards completion. In this condition, to remove the process from memory and make space for other processes, the suspended process is moved to secondary storage. This is called swapping, and the process is said to be swapped out or rolled out. Swapping may be necessary to improve the process mix.
Comparison among Schedulers

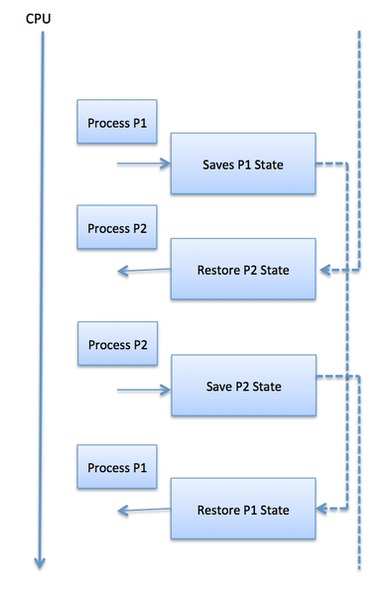


Context Switch

A context switch is the mechanism to store and restore the state, or context, of a CPU in the Process Control Block so that a process's execution can be resumed from the same point at a later time. Using this technique, a context switcher enables multiple processes to share a single CPU. Context switching is an essential feature of a multitasking operating system.

When the scheduler switches the CPU from executing one process to executing another, the state of the currently running process is stored in its process control block. After this, the state of the process to run next is loaded from its own PCB and used to set the PC, registers, etc. At that point, the second process can start executing.

Context switches are computationally intensive, since register and memory state must be saved and restored. To reduce context-switching time, some hardware systems employ two or more sets of processor registers. When the process is switched, the following information is stored for later use.
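The operating system performs real context switches inside the kernel, but the save-and-restore idea can be tried in user space with the `ucontext` functions `getcontext`, `makecontext` and `swapcontext`, which save and load the registers, program counter and stack pointer into a `ucontext_t`. These functions are no longer part of current POSIX but are still provided by glibc on Linux. Treat this as a sketch of saving and resuming execution state, not as how a kernel implements context switching; `task_body`, `context_switch_demo` and `trace` are hypothetical names.

```c
#define _XOPEN_SOURCE 700   /* some systems (e.g. macOS) require this for ucontext */
#include <assert.h>
#include <ucontext.h>

static ucontext_t main_ctx, task_ctx;  /* saved contexts: registers, PC, stack pointer */
static char task_stack[64 * 1024];     /* the task runs on its own stack */
static volatile int trace;             /* volatile: updated on both sides of each switch */

static void task_body(void)
{
    trace = trace * 10 + 2;
    swapcontext(&task_ctx, &main_ctx); /* save the task's state, restore main's */
    trace = trace * 10 + 4;            /* resumed exactly where it stopped */
}                                      /* returning switches to uc_link (main_ctx) */

/* Switches back and forth twice and returns the order in which steps ran. */
int context_switch_demo(void)
{
    trace = 0;
    getcontext(&task_ctx);
    task_ctx.uc_stack.ss_sp = task_stack;
    task_ctx.uc_stack.ss_size = sizeof task_stack;
    task_ctx.uc_link = &main_ctx;
    makecontext(&task_ctx, task_body, 0);

    trace = trace * 10 + 1;
    swapcontext(&main_ctx, &task_ctx); /* save main's state, start the task */
    trace = trace * 10 + 3;
    swapcontext(&main_ctx, &task_ctx); /* save main again, resume the task */
    trace = trace * 10 + 5;
    return trace;
}
```

`context_switch_demo()` returns 12345: each digit is appended as its step runs, which shows that the task resumed after its own `swapcontext` call instead of starting over.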


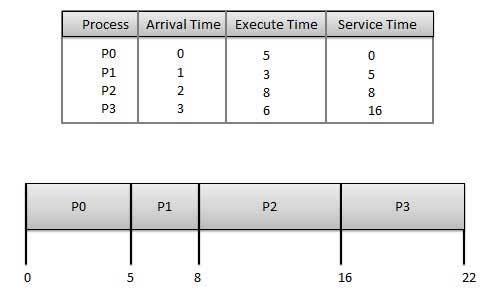

Waiting time of each process is as follows −

Average Wait Time: (0 + 4 + 12 + 5) / 4 = 21 / 4 = 5.25

Priority Based Scheduling


Waiting time of each process is as follows −

Average Wait Time: (0 + 10 + 12 + 2) / 4 = 24 / 4 = 6

Shortest Remaining Time

Round Robin Scheduling

Average Wait Time: (9 + 2 + 12 + 11) / 4 = 34 / 4 = 8.5

Multiple-Level Queues Scheduling

Multiple-level queues are not an independent scheduling algorithm. They make use of other existing algorithms to group and schedule jobs with common characteristics.

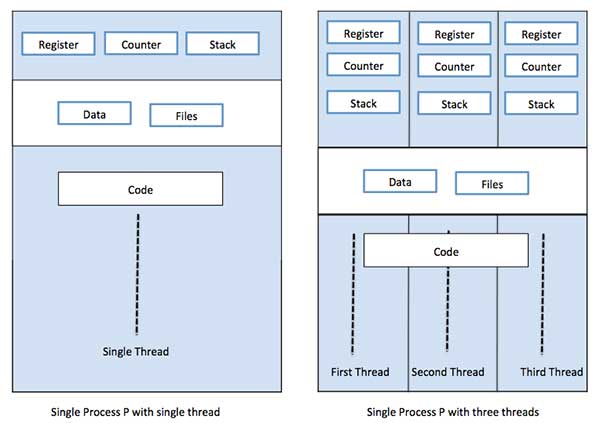
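The waiting-time averages worked out in this section depend on a process table given in the figures. As an assumption, four processes P0 to P3 arriving at times 0, 1, 2 and 3, with burst times 5, 3, 8 and 6 and priorities 1, 2, 1 and 3 (a larger number meaning higher priority), reproduce all three averages: 5.25 with non-preemptive shortest job next, 6 with non-preemptive priority scheduling, and 8.5 with round robin using a quantum of 3. The C sketch below computes waiting times for those policies; the `Proc` type, `MAX_PROCS` and both function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_PROCS 16

typedef struct {
    int arrival;    /* arrival time */
    int burst;      /* total CPU time needed */
    int priority;   /* larger number = higher priority (assumed convention) */
} Proc;

/* Non-preemptive: when the CPU is free, pick among arrived, unfinished processes
   the shortest burst (SJN) or, if use_priority, the highest priority.
   Ties go to the lower index. Fills wait[] and returns the average wait. */
double nonpreemptive_avg_wait(const Proc *p, int n, bool use_priority, int *wait)
{
    assert(n > 0 && n <= MAX_PROCS);
    bool done[MAX_PROCS] = { false };
    int time = 0, total = 0, finished = 0;
    while (finished < n) {
        int pick = -1;
        for (int i = 0; i < n; i++) {
            if (done[i] || p[i].arrival > time)
                continue;
            if (pick < 0 || (use_priority ? p[i].priority > p[pick].priority
                                          : p[i].burst < p[pick].burst))
                pick = i;
        }
        if (pick < 0) {                        /* nothing has arrived: CPU idles */
            time++;
            continue;
        }
        wait[pick] = time - p[pick].arrival;   /* time spent in the ready queue */
        total += wait[pick];
        time += p[pick].burst;                 /* runs to completion */
        done[pick] = true;
        finished++;
    }
    return (double)total / n;
}

/* Round robin. Input must be sorted by arrival time. Processes that arrive
   during a slice join the queue before the preempted process is re-queued.
   Waiting time = completion - arrival - burst. */
double round_robin_avg_wait(const Proc *p, int n, int quantum, int *wait)
{
    assert(n > 0 && n <= MAX_PROCS && quantum > 0);
    int remaining[MAX_PROCS], queue[MAX_PROCS];
    int head = 0, count = 0, admitted = 0, time = 0, total = 0, finished = 0;
    for (int i = 0; i < n; i++)
        remaining[i] = p[i].burst;
    while (finished < n) {
        while (admitted < n && p[admitted].arrival <= time)   /* admit arrivals */
            queue[(head + count++) % MAX_PROCS] = admitted++;
        if (count == 0) {                       /* idle until the next arrival */
            time = p[admitted].arrival;
            continue;
        }
        int cur = queue[head];
        head = (head + 1) % MAX_PROCS;
        count--;
        int slice = remaining[cur] < quantum ? remaining[cur] : quantum;
        time += slice;
        remaining[cur] -= slice;
        while (admitted < n && p[admitted].arrival <= time)   /* arrived during slice */
            queue[(head + count++) % MAX_PROCS] = admitted++;
        if (remaining[cur] > 0) {
            queue[(head + count++) % MAX_PROCS] = cur;        /* back of the queue */
        } else {
            wait[cur] = time - p[cur].arrival - p[cur].burst;
            total += wait[cur];
            finished++;
        }
    }
    return (double)total / n;
}
```

With the assumed table `{ {0, 5, 1}, {1, 3, 2}, {2, 8, 1}, {3, 6, 3} }`, the SJN call gives waits 0, 4, 12, 5; the priority call gives 0, 10, 12, 2; and `round_robin_avg_wait(procs, 4, 3, w)` gives 9, 2, 12, 11, matching the sums shown above.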

What is a Thread?

A thread is a flow of execution through the process code, with its own program counter that keeps track of which instruction to execute next, system registers which hold its current working variables, and a stack which contains the execution history.

A thread shares some information with its peer threads, such as the code segment, the data segment and open files. When one thread alters a shared memory item, all other threads see the change.

A thread is also called a lightweight process. Threads provide a way to improve application performance through parallelism. Threads represent a software approach to improving operating system performance by reducing overhead; a process with a single thread is equivalent to a classical process.

Each thread belongs to exactly one process, and no thread can exist outside a process. Each thread represents a separate flow of control. Threads have been successfully used in implementing network servers and web servers. They also provide a suitable foundation for parallel execution of applications on shared-memory multiprocessors. The following figure shows the working of a single-threaded and a multithreaded process.
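A minimal POSIX threads sketch of what threads share and what they keep private: `results` is a global in the data segment, so every thread of the process sees it, while `id` and `square` live on each thread's own stack. `NTHREADS`, `worker` and `run_threads` are hypothetical names; on older systems, compile with `-pthread`.

```c
#include <assert.h>
#include <pthread.h>

#define NTHREADS 4

int results[NTHREADS];   /* global (data segment): shared by all threads */

/* Thread body: `id` and `square` live on this thread's private stack. */
static void *worker(void *arg)
{
    int id = *(const int *)arg;
    int square = id * id;
    results[id] = square;    /* each thread writes only its own slot */
    return NULL;
}

/* Starts NTHREADS threads in this process, waits for them, sums their results. */
int run_threads(void)
{
    pthread_t tid[NTHREADS];
    int ids[NTHREADS];
    for (int i = 0; i < NTHREADS; i++) {
        ids[i] = i;
        if (pthread_create(&tid[i], NULL, worker, &ids[i]) != 0)
            return -1;   /* sketch: threads already started are not joined here */
    }
    int sum = 0;
    for (int i = 0; i < NTHREADS; i++) {
        pthread_join(tid[i], NULL);   /* joining also makes the thread's writes visible */
        sum += results[i];
    }
    return sum;
}
```

`run_threads()` returns 0 + 1 + 4 + 9 = 14, collected by the main thread from memory written by the other four.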

Difference between Process and Thread

Advantages of Thread

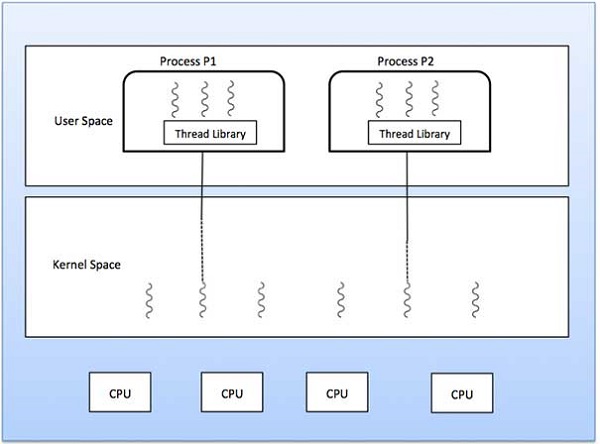

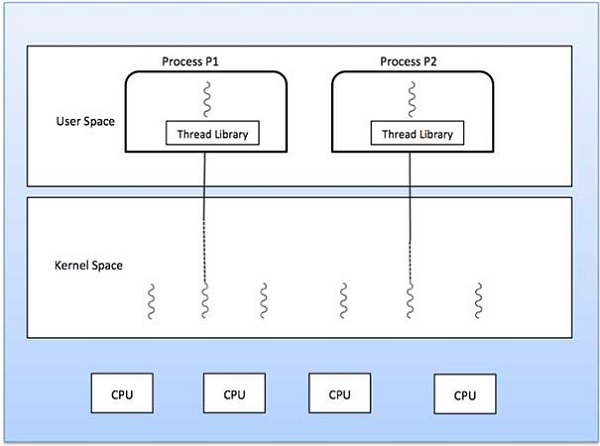

Types of Thread

Threads are implemented in the following two ways −

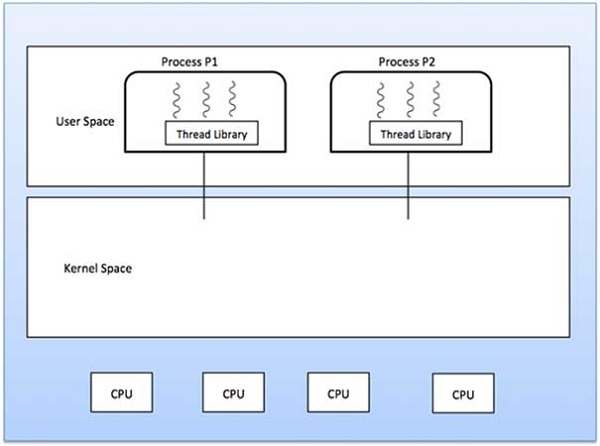

User Level Threads

In this case, thread management is done in user space by a thread library, and the kernel is not aware of the existence of threads. The thread library contains code for creating and destroying threads, for passing messages and data between threads, for scheduling thread execution, and for saving and restoring thread contexts. The application starts with a single thread.

Advantages

Disadvantages

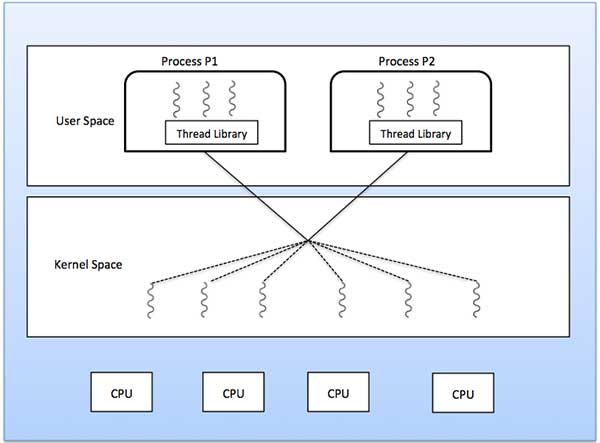

Kernel Level Threads

In this case, thread management is done by the Kernel. There is no thread management code in the application area. Kernel threads are supported directly by the operating system. Any application can be programmed to be multithreaded. All of the threads within an application are supported within a single process.

The Kernel maintains context information for the process as a whole and for individual threads within the process. Scheduling by the Kernel is done on a thread basis. The Kernel performs thread creation, scheduling and management in Kernel space. Kernel threads are generally slower to create and manage than user threads.

Advantages

Disadvantages

Multithreading Models

Some operating systems provide a combined user-level thread and Kernel-level thread facility. Solaris is a good example of this combined approach. In a combined system, multiple threads within the same application can run in parallel on multiple processors, and a blocking system call need not block the entire process. Multithreading models are of three types −

Many to Many Model

The many-to-many model multiplexes any number of user threads onto an equal or smaller number of kernel threads. The following diagram shows the many-to-many threading model, where 6 user-level threads are multiplexed onto 6 kernel-level threads. In this model, developers can create as many user threads as necessary, and the corresponding Kernel threads can run in parallel on a multiprocessor machine. This model provides the best level of concurrency, and when a thread performs a blocking system call, the kernel can schedule another thread for execution.

Many to One Model

The many-to-one model maps many user-level threads to one Kernel-level thread. Thread management is done in user space by the thread library. When a thread makes a blocking system call, the entire process is blocked. Only one thread can access the Kernel at a time, so multiple threads are unable to run in parallel on multiprocessors.

If user-level thread libraries are implemented on an operating system that does not support Kernel threads, they use the many-to-one relationship model.

One to One Model

There is a one-to-one relationship between user-level threads and kernel-level threads. This model provides more concurrency than the many-to-one model. It also allows another thread to run when a thread makes a blocking system call. It supports multiple threads executing in parallel on multiprocessors.

The disadvantage of this model is that creating a user thread requires creating the corresponding Kernel thread. OS/2, Windows NT and Windows 2000 use the one-to-one relationship model.

Difference between User-Level & Kernel-Level Thread

Process Creation

A process may be created in the system for different operations. Some of the events that lead to process creation are as follows −

A diagram that demonstrates process creation using fork() is as follows:

Process Termination

Process termination occurs when a process finishes its execution or is otherwise ended. The exit() system call is used by most operating systems for process termination. Some of the causes of process termination are as follows −

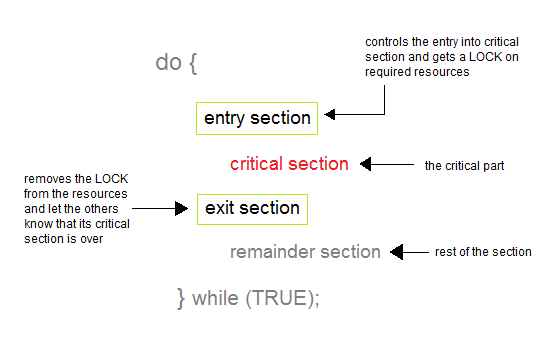
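Process creation with `fork()` and termination with an exit call can be seen together in a short POSIX sketch: the parent creates a child, the child terminates with an exit status, and the parent collects that status with `waitpid()`. The function name `spawn_and_wait` is hypothetical. The child calls `_exit()`, the variant of `exit()` that skips flushing stdio buffers inherited from the parent, which is the usual choice right after `fork()`.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Creates a child process, lets it terminate with `code`, and returns the
   exit status the parent collects, or -1 on error. */
int spawn_and_wait(int code)
{
    pid_t pid = fork();              /* returns twice: 0 in the child, child's PID in the parent */
    if (pid < 0)
        return -1;                   /* no child was created */
    if (pid == 0)
        _exit(code);                 /* child terminates; only the low 8 bits are reported */

    int status;
    if (waitpid(pid, &status, 0) < 0)    /* parent blocks until the child terminates */
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

`spawn_and_wait(42)` returns 42. Until the parent calls `waitpid()`, the terminated child remains a "zombie" whose exit status the OS keeps on record.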

Process Synchronization

Process synchronization means sharing system resources among processes in such a way that concurrent access to shared data is handled, minimizing the chance of inconsistent data. Maintaining data consistency demands mechanisms that ensure synchronized execution of cooperating processes.

Process synchronization was introduced to handle problems that arise when multiple processes execute concurrently. Some of these problems are discussed below.

Critical Section Problem

A critical section is a code segment that accesses shared variables and has to be executed as an atomic action. This means that in a group of cooperating processes, at a given point in time, only one process may be executing its critical section. If any other process also wants to execute its critical section, it must wait until the first one finishes.

Solution to Critical Section Problem

A solution to the critical section problem must satisfy the following three conditions:

1. Mutual Exclusion: Out of a group of cooperating processes, only one process can be in its critical section at a given point in time.
2. Progress: If no process is in its critical section, and one or more processes want to execute their critical sections, then one of them must be allowed to enter its critical section.
3. Bounded Waiting: After a process makes a request to enter its critical section, there is a limit on how many other processes can enter their critical sections before this process's request is granted. Once the limit is reached, the system must grant the process permission to enter its critical section.

Synchronization Hardware

Many systems provide hardware support for critical section code.
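Such support usually takes the form of an atomic instruction such as test-and-set. C11's `atomic_flag_test_and_set` has exactly that meaning (set the flag and return its previous value), so a minimal spinlock guarding a shared counter can be sketched as follows, assuming a C11 compiler and POSIX threads. `acquire`, `release`, `add_many` and `run_spinlock_demo` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

#define MAX_THREADS 8

static atomic_flag lock = ATOMIC_FLAG_INIT;  /* clear = unlocked */
static long counter;                          /* shared variable */

/* Entry section: test-and-set returns the old value, so keep spinning until
   this thread is the one that changed the flag from clear to set. */
static void acquire(void)
{
    while (atomic_flag_test_and_set(&lock)) {
        /* busy-wait */
    }
}

/* Exit section: clearing the flag lets one spinning thread in. */
static void release(void)
{
    atomic_flag_clear(&lock);
}

static void *add_many(void *arg)
{
    int iters = *(const int *)arg;
    for (int i = 0; i < iters; i++) {
        acquire();
        counter++;          /* critical section */
        release();
    }
    return NULL;
}

/* Runs nthreads threads that each increment the shared counter iters times. */
long run_spinlock_demo(int nthreads, int iters)
{
    assert(nthreads > 0 && nthreads <= MAX_THREADS);
    pthread_t tid[MAX_THREADS];
    counter = 0;
    for (int i = 0; i < nthreads; i++)
        pthread_create(&tid[i], NULL, add_many, &iters);
    for (int i = 0; i < nthreads; i++)
        pthread_join(tid[i], NULL);
    return counter;
}
```

`run_spinlock_demo(4, 10000)` returns 40000. Without `acquire`/`release`, the unsynchronized `counter++` could lose updates. The busy-wait also shows why spinlocks suit only short critical sections.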
The critical section problem could be solved easily in a single-processor environment if we could prevent interrupts from occurring while a shared variable or resource is being modified. In this manner, we could be sure that the current sequence of instructions would be allowed to execute in order without preemption. Unfortunately, this solution is not feasible in a multiprocessor environment. Disabling interrupts on a multiprocessor can be time-consuming, as the message is passed to all the processors. This message transmission lag delays the entry of threads into the critical section, and system efficiency decreases.

Mutex Locks

As the synchronization hardware solution is not easy for everyone to implement, a strict software approach called Mutex Locks was introduced. In this approach, in the entry section of code, a LOCK is acquired over the critical resources that are modified and used inside the critical section, and in the exit section that LOCK is released. As the resource is locked while a process executes its critical section, no other process can access it.

Introduction to Semaphores

In 1965, Dijkstra proposed a new and very significant technique for managing concurrent processes by using the value of a simple integer variable to synchronize the progress of interacting processes. This integer variable is called a semaphore. It is basically a synchronizing tool and is accessed only through two standard atomic operations, wait and signal, designated by P(S) and V(S) respectively.

In very simple words, a semaphore is a variable which can hold only a non-negative integer value, shared between all the threads, with operations wait and signal, which work as follows:
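In Dijkstra's classic definition, wait(S) busy-waits while S is 0 and then decrements S, and signal(S) increments S, each executed atomically. The sketch below keeps that meaning but blocks the caller on a POSIX condition variable instead of busy-waiting. The `Semaphore` type and the `semaphore_*` functions are hypothetical names; POSIX also ships a ready-made `sem_t` in `<semaphore.h>`.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical semaphore type: a counter plus a lock and a condition variable. */
typedef struct {
    int value;                /* never negative */
    pthread_mutex_t lock;     /* makes wait and signal atomic */
    pthread_cond_t  nonzero;  /* signalled whenever value is incremented */
} Semaphore;

void semaphore_init(Semaphore *s, int initial)
{
    assert(initial >= 0);
    s->value = initial;
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->nonzero, NULL);
}

/* wait, P(S): block while S == 0, then decrement S. */
void semaphore_wait(Semaphore *s)
{
    pthread_mutex_lock(&s->lock);
    while (s->value == 0)                          /* re-check after every wakeup */
        pthread_cond_wait(&s->nonzero, &s->lock);
    s->value--;
    pthread_mutex_unlock(&s->lock);
}

/* signal, V(S): increment S and wake one waiting thread, if any. */
void semaphore_signal(Semaphore *s)
{
    pthread_mutex_lock(&s->lock);
    s->value++;
    pthread_cond_signal(&s->nonzero);
    pthread_mutex_unlock(&s->lock);
}
```

For example, after `semaphore_init(&s, 2)`, two calls to `semaphore_wait` return immediately, and a third blocks until some other thread calls `semaphore_signal`.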

Properties of Semaphores

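Types of Semaphores

Semaphores are mainly of two types: binary semaphores, whose value can only be 0 or 1, and counting semaphores, whose value can be any non-negative integer.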

Example of Use

Here is a simple step-wise implementation involving the declaration and usage of a semaphore.

Limitations of Semaphores

Classical Problems of Synchronization

In this tutorial we will discuss various classic problems of synchronization. Semaphores can be used in other synchronization problems besides mutual exclusion. Below are some of the classical problems depicting flaws of process synchronization in systems where cooperating processes are present. We will discuss the following three problems:

Bounded Buffer Problem

Dining Philosophers Problem

The Readers Writers Problem

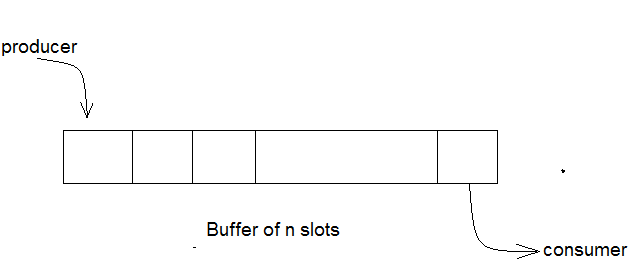

Bounded Buffer Problem

The bounded buffer problem, also called the producer-consumer problem, is one of the classic problems of synchronization. Let's start by understanding the problem here, before moving on to the solution and program code.

What is the Problem Statement?

There is a buffer of n slots, and each slot is capable of storing one unit of data. There are two processes running, namely producer and consumer, which operate on the buffer.

Bounded Buffer Problem

A producer tries to insert data into an empty slot of the buffer. A consumer tries to remove data from a filled slot in the buffer. As you might have guessed by now, those two processes won't produce the expected output if they are executed concurrently without coordination. There needs to be a way to make the producer and consumer work in an independent manner.

Here's a Solution

One solution to this problem is to use semaphores. The semaphores which will be used here are:

The Producer Operation

The pseudocode of the producer function looks like this:

The Consumer Operation

The pseudocode for the consumer function looks like this:
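A common semaphore-based solution uses three semaphores: `empty` counts free slots and starts at n, `full` counts filled slots and starts at 0, and `mutex` is a binary semaphore, starting at 1, that guards the buffer itself. A runnable sketch of producer and consumer with POSIX unnamed semaphores follows. It assumes a system that supports `sem_init` (such as Linux); `BUF_SLOTS`, `ITEMS` and the function names are hypothetical, and the sizes are arbitrary.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define BUF_SLOTS 5      /* n, the number of buffer slots (arbitrary) */
#define ITEMS     1000   /* how many items the producer makes (arbitrary) */

static int buffer[BUF_SLOTS];
static int in, out;      /* next slot to fill / next slot to empty */
static sem_t empty;      /* counts empty slots, starts at BUF_SLOTS */
static sem_t full;       /* counts filled slots, starts at 0 */
static sem_t mutex;      /* binary semaphore guarding buffer, in and out */

static void *producer(void *arg)
{
    (void)arg;
    for (int item = 1; item <= ITEMS; item++) {
        sem_wait(&empty);            /* wait until a slot is free */
        sem_wait(&mutex);
        buffer[in] = item;           /* insert into the buffer */
        in = (in + 1) % BUF_SLOTS;
        sem_post(&mutex);
        sem_post(&full);             /* one more filled slot */
    }
    return NULL;
}

static void *consumer(void *arg)
{
    long *sum = arg;
    for (int i = 0; i < ITEMS; i++) {
        sem_wait(&full);             /* wait until a slot is filled */
        sem_wait(&mutex);
        int item = buffer[out];      /* remove from the buffer */
        out = (out + 1) % BUF_SLOTS;
        sem_post(&mutex);
        sem_post(&empty);            /* one more empty slot */
        *sum += item;                /* "consume" the item outside the lock */
    }
    return NULL;
}

/* Runs one producer and one consumer; returns the sum of consumed items. */
long run_bounded_buffer(void)
{
    long sum = 0;
    pthread_t prod, cons;
    in = out = 0;
    sem_init(&empty, 0, BUF_SLOTS);
    sem_init(&full, 0, 0);
    sem_init(&mutex, 0, 1);
    pthread_create(&prod, NULL, producer, NULL);
    pthread_create(&cons, NULL, consumer, &sum);
    pthread_join(prod, NULL);
    pthread_join(cons, NULL);
    sem_destroy(&empty);
    sem_destroy(&full);
    sem_destroy(&mutex);
    return sum;
}
```

`run_bounded_buffer()` returns 500500, the sum of 1 to 1000, so every item was consumed exactly once even though the buffer holds only 5 at a time. Waiting on `empty` or `full` before `mutex` matters: taking `mutex` first and then blocking on a counting semaphore could deadlock.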

Dining Philosophers Problem

The dining philosophers problem is another classic synchronization problem, used to evaluate situations where multiple resources need to be allocated to multiple processes.

What is the Problem Statement?

Consider five philosophers sitting around a circular dining table. The dining table has five chopsticks and a bowl of rice in the middle, as shown in the figure below.

Dining Philosophers Problem

At any instant, a philosopher is either eating or thinking. When a philosopher wants to eat, they use two chopsticks: one from their left and one from their right. When a philosopher wants to think, they put both chopsticks back down at their original places.

Here's the Solution

From the problem statement, it is clear that a philosopher can think for an indefinite amount of time. But when a philosopher starts eating, they have to stop at some point. The philosopher is in an endless cycle of thinking and eating. We use an array of five semaphores, stick[5], one for each of the five chopsticks. The code for each philosopher looks like:

But if all five philosophers are hungry simultaneously and each of them picks up one chopstick, then a deadlock occurs, because each of them will wait for another chopstick forever. The possible solutions for this are:
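One standard remedy is resource ordering: number the chopsticks and have every philosopher pick up the lower-numbered one first, which makes the circular wait impossible. The sketch below follows the `stick[5]` semaphore layout described above, using POSIX unnamed semaphores (assumes a system with `sem_init`, such as Linux). `MEALS` and the function names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define PHILOSOPHERS 5
#define MEALS        100   /* arbitrary number of meals per philosopher */

static sem_t stick[PHILOSOPHERS];      /* one binary semaphore per chopstick */
static int meals_eaten[PHILOSOPHERS];  /* each entry written only by its owner */

static void *philosopher(void *arg)
{
    int i = *(const int *)arg;
    int left = i, right = (i + 1) % PHILOSOPHERS;
    /* Resource ordering: always take the lower-numbered chopstick first.
       Philosopher 4 therefore reaches for chopstick 0 before chopstick 4,
       so the circular wait needed for deadlock cannot form. */
    int first  = left < right ? left : right;
    int second = left < right ? right : left;
    for (int m = 0; m < MEALS; m++) {
        /* think */
        sem_wait(&stick[first]);
        sem_wait(&stick[second]);
        meals_eaten[i]++;                 /* eat */
        sem_post(&stick[second]);
        sem_post(&stick[first]);
    }
    return NULL;
}

/* Runs all philosophers to completion; returns the total number of meals. */
int run_dining_philosophers(void)
{
    pthread_t tid[PHILOSOPHERS];
    int ids[PHILOSOPHERS], total = 0;
    for (int i = 0; i < PHILOSOPHERS; i++) {
        sem_init(&stick[i], 0, 1);        /* every chopstick starts on the table */
        meals_eaten[i] = 0;
        ids[i] = i;
    }
    for (int i = 0; i < PHILOSOPHERS; i++)
        pthread_create(&tid[i], NULL, philosopher, &ids[i]);
    for (int i = 0; i < PHILOSOPHERS; i++) {
        pthread_join(tid[i], NULL);
        total += meals_eaten[i];
    }
    for (int i = 0; i < PHILOSOPHERS; i++)
        sem_destroy(&stick[i]);
    return total;
}
```

`run_dining_philosophers()` terminates and returns 500. If every philosopher instead took the left chopstick first, the all-hungry interleaving described above could leave all five blocked forever.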

What is the Readers-Writers Problem?

The readers-writers problem is another example of a classic synchronization problem. There are many variants of this problem, one of which is examined below.

The Problem Statement

There is a shared resource which should be accessed by multiple processes. There are two types of processes in this context: readers and writers. Any number of readers can read from the shared resource simultaneously, but only one writer can write to the shared resource at a time. When a writer is writing data to the resource, no other process can access the resource. A writer cannot write to the resource if a non-zero number of readers are accessing the resource at that time.

The Solution

In the variant examined here, readers have priority over writers: if a writer wants to write to the resource, it must wait until there are no readers currently accessing that resource.

Here, we use one mutex m and a semaphore w. An integer variable read_count is used to maintain the number of readers currently accessing the resource. The variable read_count is initialized to 0. A value of 1 is given initially to m and w. Instead of having each reader acquire the lock on the shared resource, we use the mutex m so that a process acquires and releases a lock whenever it updates the read_count variable.

The code for the writer process looks like this:

And, the code for the reader process looks like this:
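The writer and reader logic described above can be sketched with POSIX primitives, assuming `m` is a pthread mutex guarding `read_count` and `w` is an unnamed POSIX semaphore initialized to 1 (Linux-style `sem_init` support assumed). `w` must be a semaphore rather than a mutex because the reader that releases it is not necessarily the reader that acquired it. Thread counts and helper names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define WRITERS 2
#define READERS 3
#define ROUNDS  1000

static pthread_mutex_t m = PTHREAD_MUTEX_INITIALIZER; /* protects read_count */
static sem_t w;                /* held by one writer, or by the readers as a group */
static int read_count = 0;     /* readers currently accessing the resource */
static int shared_value = 0;   /* the shared resource */

static void writer_update(void)
{
    sem_wait(&w);              /* exclusive access: no readers, no other writer */
    shared_value++;            /* write */
    sem_post(&w);
}

static int reader_read(void)
{
    pthread_mutex_lock(&m);
    if (++read_count == 1)     /* first reader locks writers out */
        sem_wait(&w);
    pthread_mutex_unlock(&m);

    int v = shared_value;      /* read; other readers may be reading too */

    pthread_mutex_lock(&m);
    if (--read_count == 0)     /* last reader lets writers back in */
        sem_post(&w);
    pthread_mutex_unlock(&m);
    return v;
}

static void *writer(void *arg)
{
    (void)arg;
    for (int i = 0; i < ROUNDS; i++)
        writer_update();
    return NULL;
}

/* Each reader records whether the values it saw never went backwards. */
static void *reader(void *arg)
{
    int *monotonic = arg;
    int last = 0;
    *monotonic = 1;
    for (int i = 0; i < ROUNDS; i++) {
        int v = reader_read();
        if (v < last)
            *monotonic = 0;
        last = v;
    }
    return NULL;
}

/* Runs the writers and readers; returns the final value of the resource. */
int run_readers_writers(int monotonic[READERS])
{
    pthread_t tw[WRITERS], tr[READERS];
    shared_value = 0;
    sem_init(&w, 0, 1);
    for (int i = 0; i < WRITERS; i++)
        pthread_create(&tw[i], NULL, writer, NULL);
    for (int i = 0; i < READERS; i++)
        pthread_create(&tr[i], NULL, reader, &monotonic[i]);
    for (int i = 0; i < WRITERS; i++)
        pthread_join(tw[i], NULL);
    for (int i = 0; i < READERS; i++)
        pthread_join(tr[i], NULL);
    sem_destroy(&w);
    return shared_value;
}
```

`run_readers_writers(ok)` returns `WRITERS * ROUNDS` (2000), with no lost writes. Because the first reader in locks writers out and only the last reader out lets them back in, a steady stream of readers can starve writers in this variant.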
